Wireless control. Real-time data sharing. Cross-device communication.

These aren’t just tech buzzwords; they’re the core of US9965237B2. This patent is now linked to Flick Intelligence, LLC, a Non-Practicing Entity (NPE), in cases against Vuzix Corporation and Snap, Inc.

This patent outlines the interaction between handheld devices and multimedia displays, a concept increasingly seen in smart speaker interfaces and cross-device ecosystems. It enables users to select on-screen content using profile-based cursors and receive related data instantly.

In this article, we skip the legal debate. Instead, we turn to the Global Patent Search (GPS) tool to explore patents with similar design logic and technical concepts. GPS makes it easy to track down related inventions, compare features, and understand the broader tech landscape.

If you’re in IP research, product development, or competitive analysis, this is a clear, data-backed look at how related technologies stack up.

If you’re interested in another patent where pointing and camera-based interaction sparked major legal debates, explore our deep-dive into Pointwise Ventures LLC’s US8471812B2 and its related prior art analysis.

Understanding Patent US9965237B2

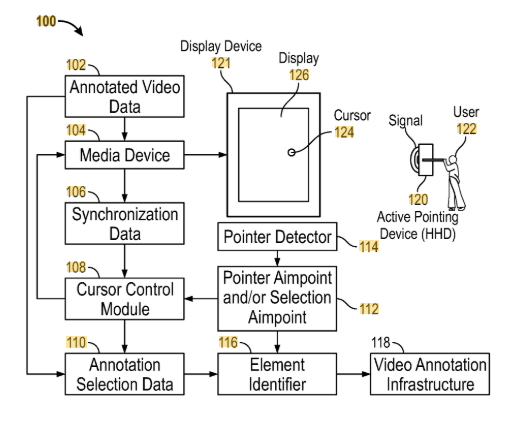

Patent US9965237B2 describes a system for enabling bidirectional communication and data sharing between wireless handheld devices (such as smartphones) and multimedia display systems (such as televisions or projection screens).

The core idea is to let users interact with displayed content in real time using a cursor, often represented by a profile icon, that can select specific elements on the screen. Once an element is selected, supplemental data related to that content is sent to the user’s handheld device from either the display itself or a remote server.

Source: Google Patents

Its Four Key Features Are

#1. Device registration with displays: A handheld device is registered with a multimedia display via a controller to initiate interaction. This registration step marks a shift from one-way remote control toward dynamic, bidirectional digital interaction.

#2. Profile icon as on-screen cursor: Users can select profile icons that act as visible cursors to mark areas or elements of interest on the screen.

#3. Contextual data retrieval: When a user selects an on-screen item with the cursor, additional information is fetched from either the display or a connected database.

#4. Multiple output options for data: Retrieved information can be pushed to another screen, stored for later use, or filtered based on the user’s profile or preferences.
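To make the four features concrete, here is a minimal Python sketch of the interaction flow: a controller registers a handheld, tracks its profile-icon cursor, resolves what the cursor is over, and routes the supplemental data. Every class, method, and field name (`Handheld`, `ScreenElement`, `DisplayController`, the in-memory `supplemental_db`) is a hypothetical illustration, not taken from the patent’s claims or any real product.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Handheld:
    """Hypothetical handheld device (e.g., a smartphone) with a user profile."""
    device_id: str
    profile_icon: str                                  # icon drawn as this user's cursor
    interests: set[str] = field(default_factory=set)   # profile-based preferences
    inbox: list[str] = field(default_factory=list)     # data pushed to the device


@dataclass
class ScreenElement:
    """Hypothetical on-screen item with a bounding box and a topic label."""
    name: str
    topic: str
    x0: int
    y0: int
    x1: int
    y1: int

    def contains(self, x: int, y: int) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


class DisplayController:
    """Hypothetical controller linking handhelds to one multimedia display."""

    def __init__(self, elements: list[ScreenElement], supplemental_db: dict[str, str]):
        self.elements = elements
        self.supplemental_db = supplemental_db  # stands in for the display or a remote server
        self.registered: dict[str, Handheld] = {}
        self.cursors: dict[str, tuple[int, int]] = {}

    # Feature 1: register a handheld with the display.
    def register(self, device: Handheld) -> None:
        self.registered[device.device_id] = device
        self.cursors[device.device_id] = (0, 0)

    # Feature 2: move the device's profile-icon cursor on screen.
    def move_cursor(self, device_id: str, x: int, y: int) -> None:
        if device_id not in self.registered:
            raise KeyError(f"{device_id} is not registered")
        self.cursors[device_id] = (x, y)

    # Features 3 and 4: select what is under the cursor, fetch data, route it.
    def select(self, device_id: str, store: list[str] | None = None) -> str | None:
        device = self.registered[device_id]
        x, y = self.cursors[device_id]
        hit = next((e for e in self.elements if e.contains(x, y)), None)
        if hit is None:
            return None
        # Profile-based filtering: skip topics outside the user's interests.
        if device.interests and hit.topic not in device.interests:
            return None
        info = self.supplemental_db.get(hit.name, "no data")
        device.inbox.append(info)   # push the data to the handheld
        if store is not None:
            store.append(info)      # optionally keep it for later use
        return info


ctrl = DisplayController(
    elements=[ScreenElement("jacket", "fashion", 10, 10, 50, 80)],
    supplemental_db={"jacket": "Brand X jacket, $120"},
)
phone = Handheld("phone-1", "avatar-blue", interests={"fashion"})
ctrl.register(phone)
ctrl.move_cursor("phone-1", 20, 30)
result = ctrl.select("phone-1")
print(result)  # Brand X jacket, $120
```

Keeping registration, cursor state, and selection in one controller mirrors the patent’s description of a controller mediating between handhelds and the display; a real system would replace the dictionary lookup with a network call to the display or a server.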

This technology enables seamless interaction between handheld devices and large multimedia displays. It supports real-time data delivery, personalized user engagement, and enhanced control over shared digital content.

This idea of one screen influencing another also appears in multi-display device patents, where secondary screens automatically surface related content based on user activity.

Similar Patents As US9965237B2

To explore the innovation landscape surrounding US9965237B2, we ran the patent through the Global Patent Search tool. Below is a quick glimpse of the GPS tool in action:

Source: Global Patent Search

This tool surfaces technically comparable patents by analyzing how systems communicate, synchronize, and interact across devices. Below, we highlight five references that share similar principles in mobile-display interaction, synchronized content delivery, and bidirectional communication. These examples offer a broader perspective on how related technologies have addressed the same challenges.

#1. KR100893119B1

This Korean patent, KR100893119B1, published in 2009, introduces a system for delivering synchronized interactive content from a server to a mobile device positioned near a video display. The mobile device, such as a PDA or remote control, receives data in real time that correlates with on-screen video, allowing users to tag and retrieve relevant content based on their interactions.

Below, we include snapshots from the GPS tool that highlight the relevant snippets from each similar patent’s specification.

What This Patent Introduces To The Landscape

- Server-controlled interactive delivery: Sends interactive elements related to video content from a server to nearby mobile devices.

- Synchronized user tagging: Allows users to tag moments of interest, with tags linked back to the video timeline.

- Two-way mobile interaction: Mobile devices both receive and influence the experience by providing feedback to the server.

- Multi-protocol wireless support: Enables communication through PCS, cellular, Bluetooth, Wi-Fi, and infrared technologies.
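The synchronized tagging idea can be illustrated with a small timeline model. The summary above does not describe KR100893119B1’s data structures, so this Python sketch simply assumes interactive cues keyed by start time in seconds; `Cue`, `SyncServer`, and their methods are hypothetical names. The server looks up which cue is active at a given playback time, and a tag sent back from a mobile device is stored against that point on the video timeline.

```python
from __future__ import annotations

import bisect
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    start: float  # seconds into the video where this item becomes active
    item: str     # interactive element pushed to nearby mobile devices


class SyncServer:
    """Hypothetical server keeping interactive cues aligned with a video."""

    def __init__(self, cues: list[Cue]):
        self.cues = sorted(cues, key=lambda c: c.start)
        self.starts = [c.start for c in self.cues]
        self.tags: list[tuple[str, float, str | None]] = []

    def active_item(self, playback_time: float) -> str | None:
        # Find the last cue whose start time is <= the current playback time.
        i = bisect.bisect_right(self.starts, playback_time) - 1
        return self.cues[i].item if i >= 0 else None

    def tag(self, user: str, playback_time: float) -> tuple[str, float, str | None]:
        # Feedback from a mobile device: record the moment on the timeline
        # together with whatever interactive item was active at that time.
        entry = (user, playback_time, self.active_item(playback_time))
        self.tags.append(entry)
        return entry

    def tags_between(self, t0: float, t1: float) -> list[tuple[str, float, str | None]]:
        # Retrieve tags that fall inside a window of the video timeline.
        return [t for t in self.tags if t0 <= t[1] <= t1]


server = SyncServer([Cue(0.0, "intro quiz"), Cue(95.5, "product link"), Cue(240.0, "poll")])
print(server.active_item(120.0))   # product link
print(server.tag("pda-7", 250.0))  # ('pda-7', 250.0, 'poll')
```

Binary search over sorted start times keeps each lookup logarithmic in the number of cues, which matters if many devices query the server while the video plays.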

How It Connects To US9965237B2

- Both systems allow mobile devices to interact in real time with multimedia content being displayed.

- Each uses contextual triggers (like tags or cursor selection) to deliver supplemental information.

- They emphasize wireless communication between handheld devices and larger media systems.

Why This Matters

KR100893119B1 demonstrates an early framework for mobile-synced interaction, a concept central to US9965237B2. It shows how tagging and synchronized feedback can enrich video experiences, supporting the broader trend toward personalized, bidirectional content delivery.

Related Read: Displays don’t just render; they shape depth and perception. Our review, Inside VDPP LLC’s Litigation Strategy: US9699444B2 and Its Related Patents, shows how multi-layer filters and bridge frames turn motion into convincing 3D.

#2. GB2491634A

This UK patent application, GB2491634A, published in 2012, introduces a dual-screen system in which interactive content related to a TV broadcast is sent from a primary screen (like a TV) to a secondary device (such as a tablet or laptop). The system uses metadata embedded in the video stream to trigger and deliver interactive experiences on the second screen while the main video continues uninterrupted on the first.

What This Patent Introduces To The Landscape

- Primary and secondary terminal coordination: Synchronizes a television and a second device (tablet or laptop) via wireless communication.

- Metadata-triggered content delivery: Transfers interactive content metadata from the TV stream to the second terminal.

- Simultaneous multi-screen experience: Displays the primary video on one screen while the interactive content appears on another.

- Electronic Program Guide integration: Optionally integrates EPG data alongside interactive metadata.

How It Connects To US9965237B2

- Both patents enable real-time interaction between multiple devices during media playback.

- Each uses supplementary data (e.g., metadata or contextual selections) to control what is shown on a secondary screen.

- They support dual-screen coordination for a more dynamic and enriched media experience.

Why This Matters

GB2491634A expands on the idea of second-screen engagement, a concept echoed in US9965237B2’s ability to relay supplemental data to mobile devices. It highlights how interactive metadata can drive real-time content delivery beyond the primary display, mirroring the multi-screen, data-sharing environment envisioned in US9965237B2.

This concept of the phone acting as the central control point also shows up in US8655341B2, where the mobile device handles app installation, invitations, and service access without external systems.

#3. US20130080916A1

This US patent application, US20130080916A1, published in 2013, outlines a system for enabling handheld devices to interact with multimedia displays using cursor-based input and contextual data retrieval. It describes registering handheld devices, displaying profile icons as cursors, and delivering supplemental data based on user selections on the screen. It mirrors many core functionalities seen in interactive second-screen technologies.

What This Patent Introduces To The Landscape

- Handheld registration with multimedia displays: Wireless devices connect to displays through a controller for real-time interaction.

- Profile-based cursor interaction: Users select icons to represent cursors for navigation and selection on the display.

- Supplemental data delivery: Information is transmitted from the display or a remote source based on user selection.

- Augmented video and annotation syncing: Supports on-demand or live annotation syncing to enhance content engagement.

How It Connects To US9965237B2

- Shares the same structural elements: handheld-device registration, cursor-based selection, and content tagging.

- Involves the delivery of synchronized supplemental data tied to what’s selected on a multimedia screen.

- Uses wireless communication between handhelds and displays for interactive, bidirectional control.

Why This Matters

US20130080916A1 is closely related to US9965237B2 in both timeline and technical scope. It reinforces the same architectural framework of linked handheld and display systems, with emphasis on user-driven content retrieval, bidirectional flow, and synchronized data sharing, highlighting how consistently this concept appears across related filings. The same push toward context-aware interaction also shows up in voice-responsive media devices, where interaction is increasingly touchless.

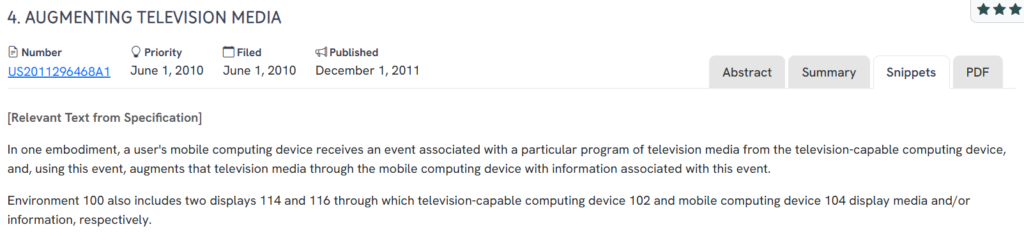

#4. US20110296468A1

This US patent application, US20110296468A1, published in 2011, presents a method for augmenting television content by using a mobile computing device to display supplemental information triggered by events occurring in a TV program. The system enables a second screen, typically a mobile device, to enrich the viewer’s experience by synchronizing additional content with the primary broadcast.

What This Patent Introduces To The Landscape

- TV-to-mobile event synchronization: Transfers event data from a TV-capable device to a mobile device to trigger augmented content.

- Dual-display environment: Supports two screens, one for the primary video content and another for supplemental information.

- Second-screen augmentation: Enhances media consumption with context-aware content displayed on a mobile computing device.

- User engagement beyond broadcast: Focuses on interactive and informational overlays tied to live or recorded television events.

How It Connects To US9965237B2

- Both systems involve delivering synchronized data from a primary display to a mobile device.

- Each uses event triggers or content selection to display supplemental media.

- They emphasize multi-screen interaction as a tool for deeper user engagement.

Why This Matters

US20110296468A1 captures a key evolution in second-screen content delivery, aligning with US9965237B2’s use of mobile interaction to enhance media experiences. It underscores the role of mobile augmentation in real-time broadcasting, reflecting a shared vision for synchronized, context-aware digital environments.

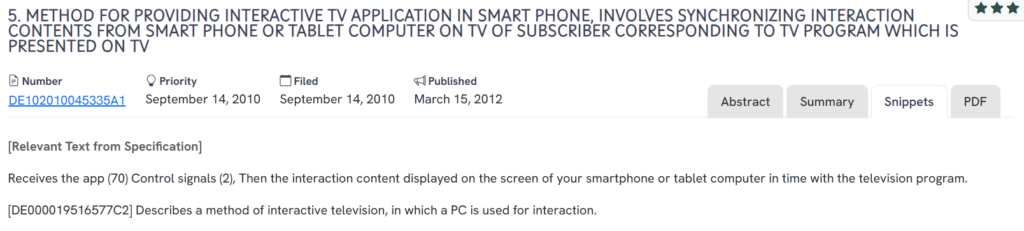

#5. DE102010045335A1

This German patent application, DE102010045335A1, published in 2012, introduces a method for synchronizing interactive TV applications between a smartphone or tablet and a subscriber’s television. The invention enables real-time interaction content to appear on a mobile device in coordination with a TV program, allowing the user to engage with synchronized digital experiences while watching live broadcasts.

What This Patent Introduces To The Landscape

- TV-smartphone synchronization: Aligns interactive content on a mobile device with what’s currently being shown on the user’s television.

- Timed content delivery: Ensures that the content presented on the smartphone matches the timing of the TV broadcast.

- Mobile-based interaction layer: Uses apps on smartphones or tablets to add an interactive overlay to the television experience.

- Bidirectional communication interface: Responds to control signals from the mobile device to influence or enhance TV content delivery.

How It Connects To US9965237B2

- Both systems emphasize synchronized interaction between mobile devices and media displays.

- Each provides a method for delivering supplemental or interactive content tied directly to what’s shown on a TV.

- They support enhanced user experiences through dual-screen coordination and real-time data flow.

Why This Matters

DE102010045335A1 reinforces the global trend of interactive second-screen applications, mirroring the vision laid out in US9965237B2. By enabling synchronized mobile engagement during live TV broadcasts, it highlights the growing importance of timed, device-linked content delivery in modern media systems.

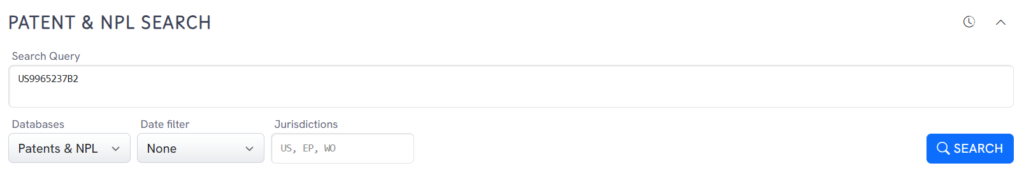

How To Find Related Patents Using Global Patent Search

Exploring the broader landscape of mobile-to-display communication is crucial when dealing with technologies such as synchronized media sharing, profile-based interaction, or second-screen content delivery. The Global Patent Search tool simplifies this process, helping users surface patents that reflect similar system architectures or interaction models.

1. Enter the patent number into GPS: Start by entering a patent number like US9965237B2 into the GPS tool. The platform instantly transforms it into a smart query, which you can refine using terms like wireless display control, profile icon cursor, or second-screen synchronization.

2. Explore conceptual snippets: Rather than line-by-line claim comparisons, GPS now highlights curated text snippets. These reveal how other systems handle bidirectional data, enable cursor-based content selection, or deliver supplemental media through handheld devices.

3. Identify related inventions: The tool surfaces patents that support dual-device coordination, on-screen tagging, or personalized content delivery, showing how others have addressed similar interaction challenges.

4. Compare systems, not legal claims: GPS focuses on the behavior of technologies, not their legal phrasing. This helps users understand functional similarities without needing to parse dense legal language.

5. Accelerate cross-domain insights: Whether you’re in user interface design, AR environments, or multimedia broadcasting, GPS reveals conceptually aligned inventions across domains that may otherwise be overlooked.

With this approach, Global Patent Search offers engineers, product teams, and IP professionals a clear, structured way to discover related technologies and shape more informed strategic decisions.

Disclaimer: The information provided in this article is for informational purposes only and should not be considered legal advice. The related patent references mentioned are preliminary results from the Global Patent Search tool and do not guarantee legal significance. For a comprehensive related patent analysis, we recommend conducting a detailed search using GPS or consulting a patent attorney.