Recording long videos is simple. The real challenge comes later, when you are trying to find the exact moment that matters. Whether it’s a goal in a game, a sudden incident on the road, or an unexpected event at home, scrolling through hours of footage is frustrating and time-consuming.

US11189321B2 offers a smart solution to this problem. It introduces a device that lets users “mark the moment” as it happens. With a quick press or an automatic trigger, the system saves the exact time of the event. Later, those marks act like bookmarks, helping users jump straight to the clips they care about without endless searching.

This makes the patent especially valuable for sports, security, and everyday recording.

Although US11189321B2 is currently part of a case between L4T Innovations LLC and Ecobee Technologies ULC, this article will focus on the technology itself.

Using the Global Patent Search (GPS) platform, we will also explore similar patents that aim to solve the same challenge in different ways.

Understanding Patent US11189321B2

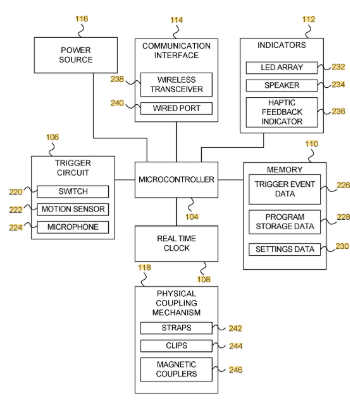

In essence, Patent US11189321B2 introduces an event marking device designed to capture the real-time occurrence of notable events. A trigger, activated manually by the user or automatically by a sensor, generates a timestamp, which is then stored in memory. This data is shared with an external device, allowing it to locate or preserve the exact media linked to that moment.

Source: Google Patents

The Key Features Of This Patent Are

1. Trigger-based event marking – A user-controlled or sensor-driven trigger marks the time of an event.

2. Real-time clock integration – A built-in or external clock logs precise time data when the trigger is activated.

3. Data storage and communication – The device stores trigger data and sends it to connected devices for media tagging or saving.

4. Wearable or equipment-mounted design – The system fits into smartwatches, phones, or mounts for vehicles and gear.

Beyond these, the patent describes additional functionalities such as motion and sound-based triggers, voice command detection, haptic feedback, LED indicators, and wireless or wired connectivity. These features expand how the device can respond to different environments and user needs.
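To make the flow described above more concrete (trigger, clock read, storage, hand-off to another device), here is a minimal Python sketch. It is only an illustration, not the patent's implementation: the names `EventMarker`, `Mark`, `trigger`, and `export`, the "manual"/"sensor" labels, and the JSON hand-off format are all assumptions made for this example.

```python
import json
import time
from dataclasses import dataclass, field, asdict
from typing import Callable, List


@dataclass
class Mark:
    timestamp: float  # seconds since the Unix epoch, as read from the clock
    source: str       # "manual" or "sensor" (hypothetical labels)


@dataclass
class EventMarker:
    # The clock is injectable, so a hardware real-time clock could replace it.
    clock: Callable[[], float] = time.time
    marks: List[Mark] = field(default_factory=list)

    def trigger(self, source: str = "manual") -> Mark:
        """Read the clock when the trigger fires and store the result."""
        mark = Mark(timestamp=self.clock(), source=source)
        self.marks.append(mark)
        return mark

    def export(self) -> str:
        """Serialize the stored marks for transfer to an external device."""
        return json.dumps([asdict(m) for m in self.marks])
```

A button press would call `marker.trigger()`, a sensor callback would call `marker.trigger("sensor")`, and the string returned by `marker.export()` is what a paired camera or phone could use to look up the matching footage.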

To see how motion sensors and trigger‑based recording evolved (from loop recording to incident capture), check out our article on the evolution of Dashcams.

Similar Patents To US11189321B2

To understand the technology landscape behind US11189321B2, we used the Global Patent Search platform to uncover similar patents. These references focus on real-time media tagging, event-based recording, and automated timestamp systems that enhance how video or image content is captured and retrieved.

1. CN105007442A

This Chinese patent, CN105007442A, published in 2015, introduces a method for marking moments in continuous video and image recording. The invention enables users to insert trigger-based timestamps during filming, which are then used to extract and preserve relevant media clips.

The system works by embedding time-stamped markers either in a file list or directly within the recorded content. Once the shooting ends, the software reads these markers to identify and extract specific time segments for saving. The duration of each clip is customizable based on user or system settings, and both video and image files are supported.
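The extraction step described above can be sketched roughly as follows. This is an assumption-laden illustration rather than the method claimed in CN105007442A: the function name `clip_windows`, the `before` and `after` parameters, and the choice to merge overlapping clips are all hypothetical.

```python
from typing import List, Tuple


def clip_windows(
    markers: List[float],
    duration: float,
    before: float = 5.0,
    after: float = 5.0,
) -> List[Tuple[float, float]]:
    """Turn marker times (seconds from the start of recording) into clip ranges.

    Each marker becomes [marker - before, marker + after], clamped to the
    recording length. Ranges that overlap are merged into a single clip.
    """
    windows: List[Tuple[float, float]] = []
    for t in sorted(markers):
        start = max(0.0, t - before)
        end = min(duration, t + after)
        if windows and start <= windows[-1][1]:
            # Overlaps the previous clip: extend it instead of adding a new one.
            windows[-1] = (windows[-1][0], max(windows[-1][1], end))
        else:
            windows.append((start, end))
    return windows
```

With markers at 3, 60, and 64 seconds in a 120-second recording, `clip_windows([3.0, 60.0, 64.0], duration=120.0)` yields two clips: one from 0 to 8 seconds and one from 55 to 69 seconds.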

Below, we have added snapshots from the GPS tool displaying the relevant snippets from the specification for similar patents.

What This Patent Introduces To The Landscape

- Marker-based video and image recording for highlighting key moments

- Trigger commands that insert timestamps during live capture

- Automatic or manual activation of trigger instructions

- Options for clip extraction based on user-defined time windows

- Built-in file tagging and manifest lists for locating relevant segments

- Integration with sensors (e.g., accelerometers) for auto-triggered capture

- Location tagging embedded with each marked event

- Support for loop recording and time-lapse photography modes

How It Connects To US11189321B2

- Both patents aim to simplify media retrieval from long-form recordings

- CN105007442A uses trigger markers and time-based clipping, similar to the timestamp logging in US11189321B2

- Each system enables users to capture only the essential moments without scanning full video files

- The technologies emphasize real-time tagging, external device support, and flexibility in user input

Why This Matters

This patent highlights the demand for smarter video recording tools that adapt to real-time events. Like US11189321B2, it shows how timestamp-based triggers can make media retrieval faster and more user-friendly, especially in high-volume or continuous recording scenarios.

2. US9224045B2

This U.S. patent, US9224045B2, published in 2015, introduces a video camera system with user-driven event tagging and capture modes. It allows users to tag events in real time using physical or digital controls, triggering the system to store video content that occurs both before and after the event.

The invention includes a “video tagging mode” for marking moments during continuous recording and a “burst mode” for initiating high-quality recordings when an event is detected. Tags are stored with timestamps and metadata, helping users navigate directly to important segments during playback. The system leverages buffers to retain pre-event footage, and user interfaces display timelines with event markers for quick review.
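One common way to retain pre-event footage is a fixed-size ring buffer, sketched below in Python. This is a simplified illustration and not the design disclosed in US9224045B2: the class `PreEventRecorder`, its method names, and the use of integers as stand-ins for frames are assumptions for the example.

```python
from collections import deque
from typing import Deque, List, Optional


class PreEventRecorder:
    """Keeps the last `pre_frames` frames so a tag can reach back in time."""

    def __init__(self, pre_frames: int, post_frames: int) -> None:
        # A deque with maxlen silently drops the oldest frame when full.
        self.buffer: Deque[int] = deque(maxlen=pre_frames)
        self.post_frames = post_frames  # expected to be at least 1
        self.remaining = 0
        self.current: Optional[List[int]] = None
        self.clips: List[List[int]] = []

    def tag(self) -> None:
        """Mark an event: seed a clip with the buffered pre-event frames."""
        if self.current is None:
            self.current = list(self.buffer)
        # Tagging again during a clip restarts the post-event countdown.
        self.remaining = self.post_frames

    def push(self, frame: int) -> None:
        """Feed one frame from the continuous recording."""
        if self.current is not None:
            self.current.append(frame)
            self.remaining -= 1
            if self.remaining <= 0:
                self.clips.append(self.current)
                self.current = None
        self.buffer.append(frame)
```

With `pre_frames=3` and `post_frames=2`, tagging right after frame 4 produces a clip holding frames 2 through 6: three frames from before the tag and two from after it.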

What This Patent Introduces To The Landscape

- Real-time event tagging using physical buttons, touch screens, or voice input

- Timestamped markers embedded in video data files for quick navigation

- Use of an image buffer to capture footage before and after a trigger event

- Two camera modes: video tagging and burst capture, for flexible recording styles

- User-defined settings for how much pre- and post-event footage is stored

- Metadata-rich tagging with support for location, quality levels, and notations

- Interactive user interfaces with event timelines and preview thumbnails

- Storage of multiple tagged segments within a single media file

- Option to record at different quality levels for continuous vs. triggered capture

- Tagging support across video, images, and sequences with timeline-based playback tools

How It Connects To US11189321B2

- Both systems enable timestamped event tagging during continuous video capture

- US9224045B2 uses buffer-based memory to save pre-event data, much like retrospective triggers

- Each solution supports user or system-initiated triggers for event detection

- Both designs focus on helping users isolate important segments in long recordings

Why This Matters

This patent reinforces the value of real-time event tagging in high-volume media recording. Like US11189321B2, it offers mechanisms for identifying, preserving, and retrieving key moments with minimal effort, enabling efficient workflows for sports, content creation, and surveillance.

Did you know: Building on a similar idea, US9792361B1 introduces searchable voice archives on phones, adding metadata, location, and speaker context to every recording.

3. US2015269968A1

This U.S. patent application, US2015269968A1, published in 2015, introduces a method for automatically detecting and tagging video events using metadata derived from audio signals. The system is designed to simplify how users find and view moments of interest, called “events”, in large collections of video content.

The invention relies on audio-based event detection. By defining audio criteria like spikes in amplitude or specific frequency thresholds, the system scans synchronized video-audio tracks to identify key moments. These are then logged using offset timestamps (precise time values measured from the beginning of the video), which makes scenes faster to retrieve.

This process avoids reliance on imprecise or manually added metadata and enables more accurate tagging. A visual UI further allows users to filter, search, and jump to events across multiple video files.
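As a rough illustration of amplitude-based detection with offset timestamps, consider the Python sketch below. It is a deliberately simple stand-in, not the algorithm in US2015269968A1: the function `detect_events`, the `min_gap_s` debounce, and the plain per-sample threshold are assumptions, and frequency-based criteria are left out.

```python
from typing import List, Sequence


def detect_events(
    samples: Sequence[float],
    sample_rate: int,
    threshold: float,
    min_gap_s: float = 1.0,
) -> List[float]:
    """Return offset timestamps (seconds from the start of the track).

    A sample counts as a hit when its absolute amplitude reaches the
    threshold. Hits closer than min_gap_s to the last logged event are
    ignored so that one loud moment is not logged many times.
    """
    events: List[float] = []
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            t = i / sample_rate  # offset relative to the first sample
            if not events or t - events[-1] >= min_gap_s:
                events.append(t)
    return events
```

Because the audio and video tracks are synchronized, each returned offset can be used directly as a seek position in the video.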

What This Patent Introduces To The Landscape

- Automated detection of video events using audio waveform analysis

- Use of offset timestamps for precise localization within video files

- Techniques to align or adjust pre-existing event metadata using detected timestamps

- Algorithms to time-adjust existing event logs for greater accuracy

- Audio criteria-based event detection using amplitude or frequency analysis

- Event tagging at frame-level precision for playback efficiency

- Integration with searchable databases of video metadata

- Interactive UI for filtering, previewing, and rapidly reviewing detected events

How It Connects To US11189321B2

- Both patents focus on event-based tagging and timestamp-driven media retrieval

- US2015269968A1 uses automated audio analysis; US11189321B2 supports manual or sensor-based triggers

- Each approach creates a timeline of highlights to simplify video review

- Both emphasize user efficiency in finding moments of interest across lengthy recordings

Why This Matters

This invention demonstrates how intelligent audio analysis can drive automated event tagging in media. Much like US11189321B2, it improves access to critical video segments, but does so through passive detection rather than real-time user input, broadening the toolkit for smarter media processing.

Related Read: Just as US9549388B2 ensures smooth navigation even when networks falter, video patents like US11189321B2 focus on continuity. Both help users access the right segments without disruption.

4. TW201537978A

This Taiwanese patent, TW201537978A, published in 2015, discloses a camera device and method for automatic video tagging using sensor-detected motion. When a user wearing the camera makes a significant movement, the system automatically generates a timestamp, marking the event in the video without requiring manual input.

The device includes a camera, a processor, and a motion-sensing module. When motion surpasses a defined threshold, the sensor sends data to the processor, which creates a time label. This tag is stored as metadata or in the video header. It can include the time of occurrence, duration, and even the type of motion performed, based on comparisons with stored motion patterns. These time tags streamline editing, playback, and search by isolating key moments.
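The threshold step described above might look something like this in Python. Treat it as a sketch under stated assumptions rather than the method in TW201537978A: the function `motion_labels`, the `(time, ax, ay, az)` sample layout, and the use of acceleration magnitude as the motion measure are hypothetical, and the motion-type comparison against stored patterns is omitted.

```python
import math
from typing import List, Optional, Sequence, Tuple

# One accelerometer reading: (time in seconds, ax, ay, az). Hypothetical layout.
Sample = Tuple[float, float, float, float]


def motion_labels(
    samples: Sequence[Sample], threshold: float
) -> List[Tuple[float, float]]:
    """Return (start_time, duration) for each run of above-threshold motion."""
    labels: List[Tuple[float, float]] = []
    start: Optional[float] = None
    last = 0.0
    for t, ax, ay, az in samples:
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        if magnitude > threshold:
            if start is None:
                start = t  # first sample of a new motion event
            last = t
        elif start is not None:
            # Motion dropped below the threshold: close the current label.
            labels.append((start, last - start))
            start = None
    if start is not None:
        # The recording ended while motion was still above the threshold.
        labels.append((start, last - start))
    return labels
```

Each returned pair corresponds to one time label, carrying the time of occurrence and the duration mentioned above, and could be written into the video's metadata or header.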

What This Patent Introduces To The Landscape

- Real-time video tagging triggered by motion detected through onboard sensors

- Timestamp generation without user input during significant movement events

- Time labels stored as metadata or embedded in the video header

- Tagging of both the time and duration of an event

- Motion classification using stored motion samples

- Association of motion type data with timestamps for better event context

- Optional still image capture during detected motion events

- Continuous photo capture during time-tagged intervals

- Applicable to wearable or body-mounted camera devices

- Designed to enhance post-production and video navigation workflows

How It Connects To US11189321B2

- Both patents use event-based mechanisms to trigger timestamp creation in continuous recordings

- TW201537978A focuses on motion detection via sensors, while US11189321B2 supports manual input and device triggers

- Each solution seeks to streamline access to relevant video segments for review or editing

- Both approaches reduce the burden of scanning lengthy recordings by isolating key moments automatically

Why This Matters

This invention illustrates how sensor data can automate content tagging in real time. Like US11189321B2, it shows how integrating smart triggers into video devices makes media review faster, more accurate, and user-friendly, especially in dynamic or high-movement environments.

Related Read: See how wearable tech pioneers are leveraging sensors to detect and log health-related events, echoing the same real-time trigger mechanisms explored in US11189321B2.

How To Find Similar Patents Using Global Patent Search

When analyzing event-based media tagging systems like US11189321B2, it is important to explore similar patents. The Global Patent Search tool makes this easier by surfacing patents that focus on timestamping, sensor-triggered recording, and retrospective video capture.

Here’s how you can use it to dive deeper into the technology landscape:

1. Start with the patent number: Enter US11189321B2 into the GPS tool. You can refine your search with terms like “event timestamp,” “video tagging,” or “sensor-based trigger.”

2. Review short snippets: GPS highlights text excerpts from similar patents, making it easy to identify key overlaps in features or functionality.

3. Look for shared mechanisms: Many systems, like US11189321B2, use real-time triggers to mark important events within large media files. These triggers may be based on user input, sensor data, or automated detection.

4. Compare how timestamps are handled: Some patents log offset timestamps automatically using audio or motion cues. Others rely on manual input or integrated metadata systems.

5. Understand the risk of indirect overlap: In some cases, similar systems may appear in the supply chain of larger media solutions. If these systems enable unauthorized use of patented features, there could be grounds for indirect infringement. This includes contributory infringement, where a party supplies components that have no substantial non-infringing use and that contribute to infringement, even if that party does not directly infringe.

By exploring Global Patent Search results, you gain a clearer view of how different developers solve similar problems, and where overlaps, whether technical or legal, could emerge. For product teams and innovators, this is key not only for innovation strategy but also for avoiding unintentional contributions to infringement down the line.

Disclaimer: The information provided in this article is for informational purposes only and should not be considered legal advice. The related patent references mentioned are preliminary results from the Global Patent Search tool and do not guarantee legal significance. For a comprehensive related patent analysis, we recommend conducting a detailed search using GPS or consulting a patent attorney.