Smart devices are everywhere, but truly intelligent help remains surprisingly rare. You ask for assistance, and your device responds, but often in ways that miss the mark. The real challenge lies in understanding not just words, but intent.

Patent US9130900B2 addresses this gap. It describes a system that decodes the meaning behind user requests. By identifying tasks, domains, and key parameters, it connects users to answers through semantic web services.

This patent is now at the center of an infringement lawsuit between GeoSymm Ventures LLC and Pixelplex Labs LLC. Rather than focus on the legal dispute, we turned our attention to the technology itself.

It is an interesting piece of technology. We wanted to know which other patented systems aim to deliver intelligent, context-aware assistance. How do they process language, assign relevance, and generate accurate results?

To explore this space, we used the Global Patent Search (GPS) tool. It helped us find similar patents that share core features with US9130900B2. Let’s explore them.

Breaking Down Patent US9130900B2

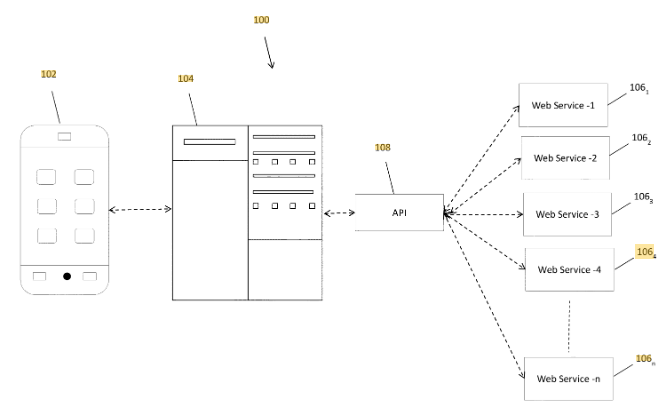

Patent US9130900B2 describes a system that helps users get assistance from their devices in a smarter way. Instead of just reacting to simple commands, the system understands what the user is asking by looking at the meaning behind the words.

It then connects to online services through APIs to find helpful answers or perform tasks like setting reminders or sending messages.

Source: Google Patents

Its Four Key Features

1. Semantic interpretation engine: This part of the system breaks down the user’s request to figure out the task, topic (domain), and any important details (parameters).

2. Contextual service integration: It uses APIs to connect the request with the right web service that can provide a useful result.

3. Automated multi-channel execution: The system can take action, like scheduling a meeting or sending a message, by using built-in apps or third-party services.

4. Personalized response logic: It tailors answers based on the user’s location, preferences, and past activity to give more relevant help.

The system can ask follow-up questions if the user’s request is unclear. It works with both voice and text input and supports apps like calendars, contacts, and messaging tools. It also pulls information from services like weather or maps, depending on what is needed. To organize this information, it uses ontologies, which are structured sets of categories and rules. It can even work with recommendation engines to suggest options the user might like.
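To make the first feature and the follow-up behavior more concrete, here is a minimal Python sketch of how a request might be broken into a domain, a task, and parameters, with a clarifying question when a required parameter is missing. It is not the patent’s method: simple keyword matching stands in for semantic interpretation, and the `ONTOLOGY` table, the `interpret` and `respond` functions, and the slot names are all hypothetical.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical mini-ontology: domain -> trigger words, task name, and the
# slots (parameters) the task needs, each tied to a preposition that marks it.
ONTOLOGY = {
    "reminder": {"triggers": {"remind", "reminder"}, "task": "create_reminder",
                 "slots": {"time": "at"}},
    "messaging": {"triggers": {"text", "message"}, "task": "send_message",
                  "slots": {"recipient": "to"}},
    "weather": {"triggers": {"weather", "forecast"}, "task": "get_forecast",
                "slots": {"location": "in"}},
}

@dataclass
class Interpretation:
    domain: str
    task: str
    params: dict = field(default_factory=dict)
    missing: list = field(default_factory=list)

def interpret(utterance: str, user_context: dict) -> Optional[Interpretation]:
    """Map an utterance to (domain, task, parameters) by keyword matching."""
    words = re.findall(r"[a-z0-9:']+", utterance.lower())
    for domain, spec in ONTOLOGY.items():
        if not spec["triggers"] & set(words):
            continue
        params, missing = {}, []
        for slot, marker in spec["slots"].items():
            if marker in words and words.index(marker) + 1 < len(words):
                params[slot] = words[words.index(marker) + 1]  # word after the marker
            elif slot in user_context:
                params[slot] = user_context[slot]              # personalized default
            else:
                missing.append(slot)                           # must ask the user
        return Interpretation(domain, spec["task"], params, missing)
    return None

def respond(interp: Optional[Interpretation]) -> str:
    if interp is None:
        return "Sorry, I didn't understand that."
    if interp.missing:
        return f"Could you tell me the {interp.missing[0]}?"  # follow-up question
    return f"{interp.task}({interp.params})"
```

For example, “What’s the weather in Paris?” resolves to the weather domain with `location` set to `paris`, while “Text Alice” produces a follow-up question because no recipient is marked. Passing a stored location in `user_context` loosely mimics the personalization feature by filling a slot the user left out.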

This technology makes digital assistants more intelligent and useful. It helps users get things done quickly, without needing to open multiple apps. The system turns everyday requests into smart, automated actions.

Recommended Read: Read about the evolution of voice assistant technology in this detailed piece we wrote a few months ago. Or save it to your to-do list.

Similar Patents to US9130900B2

To explore the technology landscape surrounding US9130900B2, we used the Global Patent Search tool to uncover related inventions. These references focus on intelligent digital assistants, voice-based interfaces, and personalized automation systems.

They showcase alternate approaches to context-aware help systems and highlight how assistants adapt to user input, device type, and communication methods.

1. US2008201306A1

This U.S. patent, US2008201306A1, published in 2008, describes a computer-based assistant that adapts to user behavior over time. It enables professionals to access contacts, calendars, and business data via speech, using mobile or landline devices. The assistant is powered by a remote server that connects to voice interfaces and data systems, delivering prioritized information based on user interaction.

Below, we have added snapshots from the GPS tool highlighting the relevant snippets from the specification for the similar patents.

What This Patent Introduces To The Landscape

- Voice-driven interface: Users interact with the assistant using spoken commands over phones or voice-enabled devices.

- Behavioral adaptation: The assistant adjusts its responses based on user habits, tone, and past interactions.

- Telephony and messaging integration: Built-in support for call handling, voicemail, and PBX systems to manage communications.

- Active scripting and customization: Uses VBScript or JScript with a COM-based model for building assistant workflows.

- Speech-to-database access: Enables querying SQL databases and enterprise systems via voice input.

- Multimodal interfaces: Supports both graphical and voice user interfaces for accessing private, corporate, and public data.
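Behavioral adaptation of this kind can be approximated with recency-weighted usage counts. The Python sketch below is an assumption, not what US2008201306A1 specifies: the hypothetical `InteractionRanker` class decays every score each time the user accesses something, so items used often and recently rise to the top of the list the assistant presents first.

```python
from collections import defaultdict
from typing import Iterable, List

class InteractionRanker:
    """Rank items (contacts, calendar entries, data sources) by how often
    and how recently the user accessed them. Hypothetical sketch."""

    def __init__(self, decay: float = 0.9):
        self.decay = decay                 # how quickly old interactions fade
        self.scores = defaultdict(float)

    def record(self, item: str) -> None:
        # Age every existing score, then credit the item just used.
        for key in self.scores:
            self.scores[key] *= self.decay
        self.scores[item] += 1.0

    def prioritized(self, items: Iterable[str]) -> List[str]:
        # Unused items score 0; sorted() is stable, so ties keep input order.
        return sorted(items, key=lambda i: -self.scores.get(i, 0.0))
```

After the user opens Alice’s contact once and Bob’s twice, `prioritized(["Carol", "Alice", "Bob"])` would put Bob first, then Alice, then Carol. The decay factor is the main design choice: closer to 1 favors long-term habits, closer to 0 favors the latest activity.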

How It Connects To US9130900B2

- Both systems interpret user input and provide tailored, real-time assistance across devices.

- Each uses APIs or similar interfaces to connect with external data and communication services.

- Both enable task execution, like accessing calendars or handling messages, via voice or text commands.

- Each incorporates user behavior and preferences to improve the quality of responses over time.

Why This Matters

This patent outlines an early model for intelligent assistants that evolve with user behavior. Its focus on voice interaction, system integration, and behavioral learning parallels modern assistant technologies. Like US9130900B2, it shows how digital agents can connect multiple services and respond intelligently, forming a key part of the evolution of context-aware help systems.

Related Read: See how US11290428B2 and similar patents tackle friction in group calls with link-based access, echoing the same focus on automation and seamless digital interaction seen in smarter digital assistant patents.

2. US2007043687A1

This U.S. patent, US2007043687A1, published in 2007, describes a virtual assistant system designed to streamline daily activities by automating routine tasks. It integrates user data with external information sources and provides intelligent services such as speech recognition, message routing, and context-aware reminders across multiple devices and platforms.

What This Patent Introduces To The Landscape

- Integration of public and personal information: Retrieves data from both personal and external sources to inform assistant actions.

- Rules-based communication management: Uses a rules engine to route calls and messages based on user status, identity of the caller, and other contextual information.

- Speech-enabled interaction: Supports voice commands via interactive voice response (IVR) and converts them into assistant actions.

- Cross-platform interface support: Enables interaction through phones, instant messaging, web clients, and connected home or vehicle systems.

- Context-aware alerts and notifications: Adjusts alarms based on weather, traffic, calendar events, user location, and travel time.

- Natural language search and query handling: Processes spoken or typed queries to locate and send documents, contacts, and other files.

- Smart communication filtering: Recognizes callers or message senders and routes communications according to user-defined preferences.

- Device and environment control: Interfaces with home automation and vehicle systems to extend assistant functions to external hardware.

- Personalized user data access: Reads and updates user calendars, contacts, tasks, preferences, and files across systems.

- Web service and API integration: Pulls live data from external services and enables external control via standardized interfaces.
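A rules engine like the one described for call and message routing is often modeled as an ordered list of condition-action pairs, where the first matching rule decides. The Python sketch below assumes that model; the `Call` and `Rule` types, the status values, the VIP set, and the action names are hypothetical and not taken from US2007043687A1.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Call:
    caller: str
    user_status: str  # hypothetical values: "available", "in_meeting", "driving"

@dataclass
class Rule:
    name: str
    condition: Callable[[Call], bool]
    action: str       # hypothetical actions: "ring", "voicemail", ...

def route(call: Call, rules: List[Rule], default: str = "voicemail") -> str:
    # First matching rule wins, so rules are ordered from most to least specific.
    for rule in rules:
        if rule.condition(call):
            return rule.action
    return default

VIP = {"boss", "spouse"}  # hypothetical user-defined preference
RULES = [
    Rule("vip_always_rings", lambda c: c.caller in VIP, "ring"),
    Rule("meeting_to_voicemail", lambda c: c.user_status == "in_meeting", "voicemail"),
    Rule("driving_hands_free", lambda c: c.user_status == "driving", "ring_hands_free"),
    Rule("available_rings", lambda c: c.user_status == "available", "ring"),
]
```

With these rules, a call from `boss` rings even while the user is in a meeting, while any other caller in that situation goes to voicemail. Keeping rules in an ordered list makes precedence explicit, which matters once user-defined preferences start to overlap.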

How It Connects To US9130900B2

- Both patents involve intelligent assistants that interpret user intent and deliver contextual services through voice or text interfaces.

- Each system supports multimodal access and integrates external services using APIs or communication frameworks.

- Both provide context-aware alerts, message routing, and voice-controlled command execution.

Why This Matters

This patent highlights a comprehensive virtual assistant platform that anticipates user needs and adapts responses based on real-world conditions. It emphasizes interoperability across devices, predictive scheduling, and natural interaction, all key traits of modern intelligent systems like the one described in US9130900B2.

Editor’s Note: For a deeper look at how voice capture and searchable transcripts are evolving, check our article on US9792361B1, the patent turning phones into voice-memory systems.

3. AU2021202350A1

This Australian patent, AU2021202350A1, published in 2021, describes a computer-based intelligent assistant system that maintains contextual information across user interactions. The assistant is designed to operate in a conversational manner, integrating inputs from multiple sources and using contextual memory to interpret user intent more naturally and accurately.

What This Patent Introduces To The Landscape

- Maintaining session and historical context: Stores previous user interactions within a session or over time to understand ambiguous references and streamline follow-up tasks.

- Multimodal conversational interface: Supports input through voice, text, GUI, and event-based triggers to allow seamless and intuitive interactions.

- Dynamic service orchestration: Interfaces with multiple external and local services to fulfill user requests, integrating results and adapting when APIs or services change.

- Domain-specific interpretation using active ontologies: Uses structured domain models, vocabularies, and dialog flows to map user input accurately to specific services and minimize ambiguity.

- Context-aware command execution: Interprets vague commands like “send him a message” by using current activity context (e.g., ongoing phone call) to identify the recipient.

- Personalized memory integration: Leverages long-term personal memory, such as contacts, to-dos, favorites, and prior commands, to personalize interactions.

- Real-time adaptation to external events: Automatically adjusts or initiates actions based on changes in weather, calendar, traffic, travel disruptions, or system notifications.

- Cross-platform device control: Enables users to control smartphones, smart home devices, and connected vehicle systems using voice or text commands.

- Predictive dialog assistance: Suggests next steps in tasks (e.g., reserving a table, booking a flight) based on current dialog context and known task flows.

- Unified input model: Normalizes all types of user input into a single format, making the assistant’s response mechanism agnostic to input modality.
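The “send him a message” example can be sketched as a reference-resolution step that checks the current activity before falling back on dialog history. This Python sketch is an illustration under assumptions, not the mechanism in AU2021202350A1: the `SessionContext` and `Contact` types, the pronoun matching, and the priority order (active call first, then most recent mention) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Contact:
    name: str
    pronoun: str  # "him", "her", or "them"

@dataclass
class SessionContext:
    active_call: Optional[Contact] = None                   # current activity
    mentioned: List[Contact] = field(default_factory=list)  # dialog history, oldest first

    def resolve(self, pronoun: str) -> Optional[Contact]:
        # 1) The person the user is currently on the phone with takes priority.
        if self.active_call and self.active_call.pronoun == pronoun:
            return self.active_call
        # 2) Otherwise, use the most recently mentioned matching contact.
        for contact in reversed(self.mentioned):
            if contact.pronoun == pronoun:
                return contact
        return None

def handle_send_message(command: str, ctx: SessionContext) -> str:
    words = command.lower().split()
    for pronoun in ("him", "her", "them"):
        if pronoun in words:
            target = ctx.resolve(pronoun)
            if target:
                return f"send_message(to={target.name})"
    return "Who should I send it to?"  # fall back to a clarifying question
```

If the user is on a call with Raj and earlier mentioned Tom, “send him a message” goes to Raj; with no active call, it goes to whichever matching contact was mentioned last.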

How It Connects To US9130900B2

- Both patents describe intelligent assistants capable of maintaining conversational context and interpreting intent across multiple domains.

- Each system supports voice and text input, integrates external APIs, and uses domain-based models to reduce ambiguity.

- Both leverage personalized user data and memory to tailor interactions and streamline user requests.

Why This Matters

This patent introduces robust mechanisms for context maintenance, domain-aware interpretation, and multimodal input handling, all critical for creating natural, human-like interactions with virtual assistants. It underscores the technological evolution from command-based systems to context-driven, dynamic assistants that can predict, personalize, and adapt to user needs in real time.

Another patent where captured images are analyzed to identify objects and return actionable results is US8471812B2, which Pointwise Ventures is asserting broadly against visual-search technologies in retail platforms.

4. US2008159491A1

This U.S. patent, US2008159491A1, published in 2008 by Verbal World Inc., outlines a comprehensive system for managing and using information received through voice input, particularly across devices like phones and computers. It emphasizes multimodal communication, verbal interaction with web-based and calendar services, and automated task execution based on spoken commands.

What This Patent Introduces To The Landscape

- Voice-driven data entry and command execution: Enables users to input structured information, notes, emails, or commands through voice, removing the need for keyboard-based interaction.

- Multimodal interaction capabilities: Supports voice, text, video, email, images, and even file-based formats, allowing seamless interaction across different data types.

- Voice-operated calendar and reminders: Allows scheduling, reminders, and even initiating calls or sending emails based on calendar events through voice commands.

- Voice-based email functionality: Users can compose, send, receive, and sort emails entirely by voice, with support for attachments, file conversion, and audio-to-text functionality.

- Web and e-commerce interaction via voice: Enables ordering, tracking, searching, and retrieving information from websites through a voice-controlled interface.

- Automated fulfillment protocols: Supports automated workflows (menu-driven or free-form) that can respond to user voice input and trigger backend actions, such as emailing, printing, or placing an order.

- Support for mobile and telephony platforms: Designed to work across phones, cellular networks, and VoIP platforms, making it accessible in mobile and hands-free environments.

- Customizable input/output sequences: Users can define voice-based shortcuts for repetitive tasks, like generating reports or sending common messages.

- Remote action triggering: Supports voice-activated commands that can control services or devices at remote locations (e.g., printing a file at a print shop).

- Retail integration with voice input: Can detect shopping-related queries and trigger purchase workflows or suggestions using voice alone.
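User-defined voice shortcuts reduce, at their simplest, to a lookup from a spoken phrase to an ordered list of actions. The Python sketch below assumes speech has already been transcribed to text; the `ShortcutRegistry` class and the `generate_report`, `send_email`, and `print_file` actions are hypothetical placeholders that only return a description of the call they would make, not anything defined in US2008159491A1.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, dict]  # (action name, arguments)

def make_action(name: str) -> Callable[[dict], str]:
    # Placeholder handler: a real system would call an email, print, or order service.
    def action(args: dict) -> str:
        rendered = ", ".join(f"{k}={v}" for k, v in sorted(args.items()))
        return f"{name}({rendered})"
    return action

ACTIONS = {n: make_action(n) for n in ("generate_report", "send_email", "print_file")}

class ShortcutRegistry:
    def __init__(self) -> None:
        self._shortcuts: Dict[str, List[Step]] = {}

    def define(self, phrase: str, steps: List[Step]) -> None:
        # Reject shortcuts that reference actions the system cannot perform.
        unknown = [name for name, _ in steps if name not in ACTIONS]
        if unknown:
            raise ValueError(f"unknown actions: {unknown}")
        self._shortcuts[phrase.lower().strip()] = steps

    def run(self, transcript: str) -> List[str]:
        steps = self._shortcuts.get(transcript.lower().strip())
        if steps is None:
            return []  # not a shortcut; hand off to normal interpretation
        return [ACTIONS[name](args) for name, args in steps]
```

A user could register “weekly wrap-up” as a report followed by an email to the team; saying that phrase later replays both steps in order, while any other phrase falls through to ordinary command handling.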

How It Connects To US9130900B2

- Both patents focus on voice-based control and natural interaction between users and computing systems.

- US2008159491A1 provides a more service-oriented voice interface with a strong focus on calendar, email, and e-commerce integration, whereas US9130900B2 is more centered around domain-driven dialogue and task orchestration.

- Both support voice-driven task execution, context-based actions, and integration with external services like email, calendars, and web APIs.

Why This Matters

This patent demonstrates an early, broad vision for voice-first computing, pushing past simple voice commands to support complete end-to-end workflows using speech. It anticipates the convergence of voice, mobile, and web, enabling rich user experiences in both personal and enterprise contexts. Its modular, extensible design foreshadows later multimodal assistants and voice-based productivity tools.

Systems that try to infer intent rather than react to keywords have evolved steadily, as seen in US9639608B2, which explores early semantic reasoning in digital assistants.

How to Find Related Patents Using Global Patent Search

Exploring how other inventions work is useful when studying systems like US9130900B2. This patent deals with intelligent assistants that understand voice commands and take action for the user. The Global Patent Search tool makes it easy to find similar inventions and study how they work. Tools like AI-powered patent search platforms can also help surface relevant patents based on shared features.

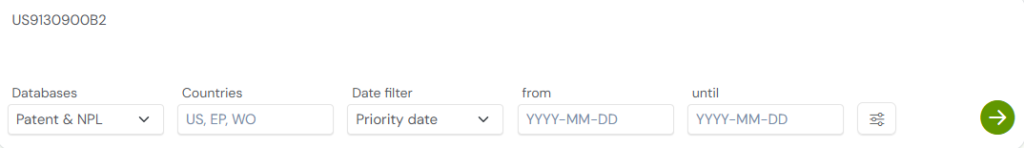

1. Enter the patent number into GPS: Start by typing patent number US9130900B2 into the GPS tool. You can also add terms like “voice command,” “context handling,” or “assistant response.”

Source: GPS

2. Use text snippets to learn faster: GPS shows short snippets from other patents. These explain how each system understands and responds to the user.

3. Find inventions with similar features: Many results include systems that track past interactions. Some also manage calendars, apps, and reminders.

4. Compare how they solve problems: You will see how each system deals with vague commands. Some ask follow-up questions. Others use past input to decide what the user meant.

5. Watch for trends in assistant technology: GPS helps spot ideas that appear often, like managing tasks, using memory, or handling more than one request at a time.

Use Global Patent Search to compare ideas, track innovation trends, and understand how systems evolve. Whether you are designing new products or researching technology, GPS helps you make better decisions. A strong patent prosecution strategy helps businesses protect intellectual property. Try it to uncover patterns, spot new directions, and get inspired by how others solve similar problems.

Disclaimer: The information provided in this article is for informational purposes only and should not be considered legal advice. The related patent references mentioned are preliminary results from the Global Patent Search tool and do not guarantee legal significance. For a comprehensive related patent analysis, we recommend conducting a detailed search using GPS or consulting a patent attorney.